The number of spine joints in this example is pretty low purely to reduce the amount of connection explanations I have to type. It should be fairly easy to take this and construct it over more joints. Normally I'd be working with at least one extra joint, sometimes more, and this really improves the deformation quality in non-stretchy mode, but particularly when the spine is stretching. There are also some real limitations to how far you can go with squash & stretch setups for a game engine, which I'll get to later...

First, add a float attribute to torso_ctrl called stretchy with min/max values of 0 and 10. Really you can use whatever you feel appropriate here, I tend to use 0-10 simply because it gives a much finer level of control than 0-1.

Open up the Hypershade and drag torso_ctrl into the work area. Also drag in spinePos_grp_01, 02 and 03.

Create two Distance Between nodes and call them driver_spine_dist_01 and driver_spine_dist_02.

Connect spinePos_grp_01.translate to driver_spine_dist_01.point1 and spinePos_grp_02.translate to driver_spine_dist_01.point2.

Connect spinePos_grp_02.translate to driver_spine_dist_02.point1 and spinePos_grp_03.translate to driver_spine_dist_02.point2.

The easiest way to connect to a Distance Between node is using the Connection Editor. For some reason the attributes you want to connect to don't appear when you right click on the input triangle in the Hypershade.
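If you'd rather script these connections than set them up by hand, the following is a minimal sketch of one way to do it. The function names are my own (hypothetical), and it assumes the spinePos groups already exist in the scene. The first function just builds the list of connections, so it also works for longer spines; the second applies them and only runs inside Maya.

```python
def spine_distance_connections(point_groups):
    """Return (source, destination) attribute pairs, one distance node per
    pair of neighbouring spine position groups."""
    pairs = []
    for i in range(len(point_groups) - 1):
        node = "driver_spine_dist_%02d" % (i + 1)
        pairs.append((point_groups[i] + ".translate", node + ".point1"))
        pairs.append((point_groups[i + 1] + ".translate", node + ".point2"))
    return pairs


def build_distance_nodes(point_groups):
    """Create the distanceBetween nodes and wire them up (Maya only)."""
    import maya.cmds as cmds  # only importable inside a Maya session

    for i in range(len(point_groups) - 1):
        cmds.createNode("distanceBetween", name="driver_spine_dist_%02d" % (i + 1))
    for src, dst in spine_distance_connections(point_groups):
        cmds.connectAttr(src, dst)
```

Inside Maya you would call `build_distance_nodes(["spinePos_grp_01", "spinePos_grp_02", "spinePos_grp_03"])`. Adding more groups to the list gives you one extra distance node per extra joint.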

The graph should look something like this:

So, now we have two nodes that are tracking the length between three points. This will give us the target length of the joints for squashing/stretching along the NURBS surface. We also need to take note of the default/rest length so we can blend between stretchy/non-stretchy behaviour types.

Create a Blend Colors node called spine_stretch_blend. This will take two translate values, one stretchy and one default/rest, and give us an output value that is a blend of the two. The blend point is determined by a 0-1 attribute called blender and will be driven by the stretchy attribute on torso_ctrl. By using a Blend Colors node in this way we can provide a smooth blend between stretch and non-stretchy rather than a simple on/off switch.

Connect driver_spine_dist_01.distance to spine_stretch_blend.color1R and driver_spine_dist_02.distance to spine_stretch_blend.color1G.

Select spine_stretch_blend and take note of the value of .color1R. Add a float attribute to driver_spine_dist_01 called defaultLen. Set the default value of this attribute to the current value of spine_stretch_blend.color1R.

Add the same defaultLen attribute to driver_spine_dist_02, this time setting the default value to that of spine_stretch_blend.color1G.

Connect driver_spine_dist_01.defaultLen to spine_stretch_blend.color2R.

Connect driver_spine_dist_02.defaultLen to spine_stretch_blend.color2G.

So, now we need to hook up the blend point to our custom attribute on torso_ctrl. The blender attribute goes from 0-1, but our custom attribute goes from 0-10, so we need to remap this value. You can use a multiplyDivide node to do this or, as I'm going to here, a Remap Value node called spine_stretchy_remap.

Open up the Attribute Editor for spine_stretchy_remap and set Input Max to 10. Everything else can be left at default.

Connect torso_ctrl.stretchy to spine_stretchy_remap.inputValue.

Connect spine_stretchy_remap.outValue to spine_stretch_blend.blender.
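To make it clearer what the remap and blend are doing to the numbers, here is a small plain Python model of the two nodes. It is only a sketch: it assumes the Remap Value node is left on its default straight-line ramp, the clamp is my own simplification, and the function names are made up for illustration.

```python
def remap_value(value, input_min=0.0, input_max=10.0,
                output_min=0.0, output_max=1.0):
    """Linear remap, modelling a Remap Value node with a straight ramp."""
    t = (value - input_min) / (input_max - input_min)
    t = min(max(t, 0.0), 1.0)  # clamp to 0-1 (simplifying assumption)
    return output_min + t * (output_max - output_min)


def blend_colors(color1, color2, blender):
    """Blend Colors: blender=1 gives color1, blender=0 gives color2."""
    return color1 * blender + color2 * (1.0 - blender)


def joint_length(live_distance, default_len, stretchy):
    """Length fed to a driver joint for a given 0-10 stretchy value."""
    return blend_colors(live_distance, default_len, remap_value(stretchy))
```

So with a rest length of 4 and a measured distance of 6, `joint_length(6.0, 4.0, 0)` stays at the rest length, `joint_length(6.0, 4.0, 10)` follows the measured distance, and anything in between is a proportional mix of the two.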

The final step (kind of...) is to hook the output of the Blend Colors node to the translateY attributes of the spine driver joints.

Connect spine_stretch_blend.outputR to driver_spine_02.translateY.

Connect spine_stretch_blend.outputG to driver_sternum.translateY.
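For reference, the whole stretch network up to this point could be scripted roughly as below. Treat it as a sketch rather than a drop-in tool: it assumes the two distance nodes from earlier already exist, that the rig is sitting in its rest pose when you run it (so the measured distance can be stored as defaultLen), and that torso_ctrl doesn't already have a stretchy attribute. The function and list names are my own.

```python
STRETCH_CONNECTIONS = [
    ("driver_spine_dist_01.distance", "spine_stretch_blend.color1R"),
    ("driver_spine_dist_02.distance", "spine_stretch_blend.color1G"),
    ("driver_spine_dist_01.defaultLen", "spine_stretch_blend.color2R"),
    ("driver_spine_dist_02.defaultLen", "spine_stretch_blend.color2G"),
    ("torso_ctrl.stretchy", "spine_stretchy_remap.inputValue"),
    ("spine_stretchy_remap.outValue", "spine_stretch_blend.blender"),
    ("spine_stretch_blend.outputR", "driver_spine_02.translateY"),
    ("spine_stretch_blend.outputG", "driver_sternum.translateY"),
]


def build_stretch_network():
    """Create the blend/remap nodes and make the connections (Maya only)."""
    import maya.cmds as cmds  # only importable inside a Maya session

    cmds.addAttr("torso_ctrl", longName="stretchy", attributeType="float",
                 minValue=0, maxValue=10, defaultValue=0, keyable=True)
    cmds.createNode("blendColors", name="spine_stretch_blend")
    cmds.createNode("remapValue", name="spine_stretchy_remap")
    cmds.setAttr("spine_stretchy_remap.inputMax", 10)

    # Store the rest length on each distance node (rig must be in rest pose).
    for node in ("driver_spine_dist_01", "driver_spine_dist_02"):
        rest = cmds.getAttr(node + ".distance")
        cmds.addAttr(node, longName="defaultLen", attributeType="float",
                     defaultValue=rest)

    for src, dst in STRETCH_CONNECTIONS:
        cmds.connectAttr(src, dst)
```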

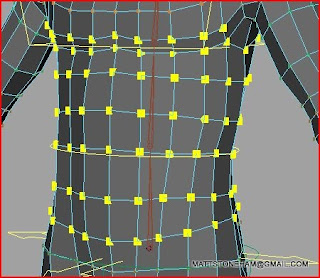

So this is a capture of what we should have so far:

You'll immediately notice that while the spine does indeed squash & stretch along its length there is no bulging/thinning of the torso. This is one of the perennial issues with rigging characters for games. If you've read some of my earlier posts or work in the industry you'll know that almost all game engines do not support joint scaling. It's easy to set up a stretch along a joint length: as long as you have enough joints, you can get away with modifying the translation for almost the same effect, as we have done here.

On current and previous projects stretchy spines have been used but only to help exaggerate what is essentially humanoid movement and for this style of animation the current set up is more than sufficient.

The best option to achieve proper cartoon-level squash & stretch for a games engine, including bulging/thinning the mesh as the spine length changes, would be to use a couple of blend shapes driven by the overall spine length on top of this set up.

Anyway, for the majority of people that aren't concerned with the limitations of game engines the squash & stretch in its current form is basically unfinished. Also, if I get permission to use some assets from Enslaved I'm planning a pretty lengthy post on how we used corrective blend shapes for muscle deformation so I don't really want to get into that here.

So enough rambling, let's add this in using a lattice and some clusters. I'd just like to say at this point that lattice deformers are brilliant. I dunno, if I ever do some non-games work I might actually get to use them a bit more often...

First off, make a vertex selection that is basically the area that you want the volume to change in as the spine length changes.

Create a Lattice deformer. Here I've created a fairly low resolution one just so setup is quicker (also the mesh is quite low resolution, so a higher res lattice won't give me much benefit), but you might want to use a more complex lattice to give you finer control over the deformation effect it has on the mesh.

You now need to change the input order of your deformers onto the geometry. Currently you have a skinCluster input and a lattice input. The order in which these are evaluated has a dramatic effect on how the mesh deforms when multiple deformers are acting on a single mesh. Generally there is 'correct' behaviour and 'completely messed up' behaviour, so you'll know if your orders are right or not. As a general rule-of-thumb you want your skinCluster deformer to be evaluated last.

So, to ensure this is the case right click on the mesh, go to inputs and then all inputs. You should see this window:

So here you can see the order of evaluation. Currently Ffd (the lattice) is last to be evaluated. Using the middle mouse button, drag the Ffd input over the top of Skin Cluster. They should switch positions and look like this:

Marvellous. Now to create some clusters on the lattice. We will use driven keys to drive the scale (and probably tweak translation/rotation too) of the clusters which will deform the lattice, and therefore our skinned geometry, as the spine length changes.

Here I've created one cluster per row of verts. You can see the vert selection for the top cluster.

I now need two values: 1) the total default length of the spine, and 2) the total current length. I'll then divide the current length by the default length, which gives me a value (essentially a scale value, where 1 is the default length and 2 is twice as long) I can use to drive the clusters.

Create two plusMinusAverage nodes called total_defaultLen and total_len.

Connect driver_spine_dist_01.defaultLen to total_defaultLen.input1D[0]

Connect driver_spine_dist_02.defaultLen to total_defaultLen.input1D[1]

Connect driver_spine_02.translateY to total_len.input1D[0]

Connect driver_sternum.translateY to total_len.input1D[1]

Create a multiplyDivide node called total_len_div and set operation to Divide.

Connect total_len.output1D to total_len_div.input1X

Connect total_defaultLen.output1D to total_len_div.input2X
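In plain numbers, the two plusMinusAverage nodes and the divide are doing nothing more than this. It's a tiny sketch with a made-up function name, just to show what value the clusters will end up being driven by.

```python
def stretch_ratio(current_lengths, default_lengths):
    """Total current spine length divided by total default length."""
    total_len = sum(current_lengths)          # total_len.output1D
    total_default = sum(default_lengths)      # total_defaultLen.output1D
    return total_len / total_default          # total_len_div.outputX
```

With two segments at a rest length of 4 each, `stretch_ratio([4.0, 4.0], [4.0, 4.0])` gives 1 (default), `stretch_ratio([8.0, 8.0], [4.0, 4.0])` gives 2 (twice as long) and `stretch_ratio([2.0, 2.0], [4.0, 4.0])` gives 0.5 (squashed to half). Because the current lengths come from the joints' translateY, which is the blended output, the ratio stays at 1 whenever stretchy is set to 0.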

Your Hypershade should look like this:

The outputX attribute of total_len_div is what we will use to drive the clusters. The easiest way of doing this is through driven keys. Load this and your clusters into the Set Driven Key tool.

First, make sure torso_ctrl.stretchy is set to 10. Set a key with everything in the default position.

Translate torso_ctrl down and scale/translate/rotate the clusters to create a bulge. Set a key.

Translate torso_ctrl up and modify the cluster scale/translate and rotates again to create a thinner shape. Set a key again.
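If you have a lot of clusters, the same three keys can be set from a script instead of through the Set Driven Key window. The sketch below is only an outline under some assumptions: the cluster handle name and the scale values in the usage note are placeholders I've invented, and rather than posing torso_ctrl it passes the driver value for each key directly. You would still want to tune the shapes by eye.

```python
def driven_key_calls(driver_attr, driven_attrs, keys):
    """Build (driven attribute, keyword arguments) pairs, one per key.

    keys is a list of (driver value, driven value) tuples.
    """
    calls = []
    for driver_value, driven_value in keys:
        for attr in driven_attrs:
            calls.append((attr, {
                "currentDriver": driver_attr,
                "driverValue": driver_value,
                "value": driven_value,
            }))
    return calls


def apply_driven_keys(calls):
    """Set the driven keys in the scene (Maya only)."""
    import maya.cmds as cmds  # only importable inside a Maya session

    for attr, kwargs in calls:
        cmds.setDrivenKeyframe(attr, **kwargs)
```

For example, `driven_key_calls("total_len_div.outputX", ["spine_cluster_01Handle.scaleX", "spine_cluster_01Handle.scaleZ"], [(0.5, 1.4), (1.0, 1.0), (1.5, 0.7)])` describes a bulge when squashed to half length, no change at the default length, and a thinner shape when stretched. Pass the result to `apply_driven_keys` inside Maya.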

And here is a capture of the whole thing working (right at the end I'm changing the stretchy attribute on torso_ctrl):

The mesh I'm using is extremely low resolution, even by games standards, so you should expect a much higher quality result than you see here by using a 'final' mesh. You'd also want to use a higher resolution lattice deformer.

Matt.

Great post, I learnt a lot!

Thanks!

-Dan

This is great stuff man, thanks so much for sharing. Learning loads:)

Does the Unreal game engine support corrective blend shapes? How can we use this technique for a game engine? Thanks very much for sharing.

Hey,

Yes you can use corrective blend shapes in Unreal Engine using the morph target system.

In fact we did exactly that for the character Monkey in Enslaved. We had 48 face shapes and 8 body corrective shapes (left and right shoulder up/shoulder forward/shoulder back/arm forward).

Setting it up sort of depends on your studio/personal pipeline. For example, as we use Morpheme Connect as our animation blend/state machine we drive morph targets in game using joint translations. If you are using the animTree system in Unreal you don't need to do this as morph target tracks are supported to animate the shapes directly.

What should be common to most, if not all pipelines is that the shapes and all the systems that drive them should be fully set up in Maya/Max so that when you export the animation (normally just joints) you are also exporting the animation for the shapes themselves.

So for example you could have a shape that corrects the shape of the Latissimus Dorsi muscle as the shoulders raise, and this would be connected to your shoulder joint rotations in Maya.

You can then export the whole lot using FBX as your interchange format. When you import the FBX into Unreal for the first time it creates the skeletalMesh, and you can also opt to import blend shapes and animation. Unreal *should* automatically create morph target animation tracks for you to animate the shapes as they were in Maya.

The FBX pipeline is slightly hit & miss at the moment as it's still under heavy development, plus this method is not one we use in our studio. With that in mind, please consider this an outline of the general principle rather than absolute instructions. Make sure you have the most recent Maya FBX plugins and a recent build of Unreal and you should hopefully avoid too many issues.

Hey, I don't know if you know about this, but rather than creating a Distance Between node, I like to use a CV curve. If you run the MEL "arclen -ch 1" you can turn on its curve info node, which will give you the length of the curve. That way, if I have an IK spline on there, I can make my own spline that I know the length of.

Great explanation, Matt, I was unsure if I'm "allowed" to alter the transform of bones instead of scaling them like people suggested in other tutorials. But your article removed my fear :)

One thing I'd be interested in is: Can you have a falloff between your lattice and the rest of the geometry and how? I know there are options to set, when you initially create the lattice, but those seem to have no effect on points I have not selected, when I created it earlier?!

How do you get the lattice information into the game engine?
